Project Overview

This project trains a reinforcement learning (RL) agent to play the game *Cuphead*, specifically to defeat the Root Pack boss fight. I used a YOLO object detection model to analyze the game's screen in real time, then fed its detections into a Deep Q-Network (DQN), which learns to choose actions and execute inputs based on the observed state of the game. The project explores the challenges of training AI in fast-paced, visually complex environments such as video games.

Tech Stack

Key Features

Deep Q-Learning

Implemented a deep Q-network that learns optimal action policies through trial and error, with experience replay to improve stability.
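As a rough illustration of the two ideas named above, here is a minimal sketch of an experience replay buffer and the one-step Q-learning target. The names `ReplayBuffer` and `td_target`, the capacity, and the discount factor `gamma=0.99` are assumptions for illustration, not CupBot's actual code or settings.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity):
        # A bounded deque evicts the oldest transition once capacity is reached.
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling breaks the correlation between consecutive frames,
        # which is the main reason replay tends to stabilize training.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_target(reward, next_q_values, done, gamma=0.99):
    """One-step target: r + gamma * max_a' Q(s', a'), or just r at episode end."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)
```

In a full training loop, the network's prediction for the action taken would be regressed toward `td_target` for each sampled transition.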

Object Detection

Used OpenCV to feed game frames into a YOLO object detection model for real-time game state detection, including boss positions, projectiles, and player health.
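A DQN needs a fixed-length input, so the variable number of detections per frame has to be flattened somehow. The sketch below shows one possible encoding. The detection format (dicts with `label`, `x`, `y`), the class names, the `max_projectiles` limit, and the `-1.0` placeholder are all hypothetical and not taken from the project.

```python
def build_state(detections, max_projectiles=3):
    """Flatten detections into a fixed-length list of normalized coordinates.

    Each detection is assumed to be a dict such as
    {"label": "boss", "x": 0.8, "y": 0.5}, with x and y as box centers
    in [0, 1]. Objects that were not detected are encoded as -1.0.
    """
    def first(label):
        for d in detections:
            if d["label"] == label:
                return [d["x"], d["y"]]
        return [-1.0, -1.0]

    state = first("player") + first("boss")
    player_x = state[0]

    # Keep only the projectiles horizontally closest to the player.
    projectiles = [d for d in detections if d["label"] == "projectile"]
    projectiles.sort(key=lambda d: abs(d["x"] - player_x))
    for i in range(max_projectiles):
        if i < len(projectiles):
            state += [projectiles[i]["x"], projectiles[i]["y"]]
        else:
            state += [-1.0, -1.0]  # pad so the vector length never changes
    return state
```

With the default `max_projectiles=3`, the result always has 10 entries: two for the player, two for the boss, and six for projectiles.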

Performance Metrics

Developed custom reward functions and evaluation metrics to track agent improvement over time.
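To make the idea of a custom reward function concrete, here is a hedged sketch of what one could look like for a boss fight. The signal names (`prev_hp`, `hp`, `boss_hit`, `won`) and every numeric weight are assumptions chosen for illustration; the actual reward terms used by CupBot are not specified here.

```python
def compute_reward(prev_hp, hp, boss_hit, won, step_penalty=0.01):
    """Combine per-step signals into one scalar reward (illustrative weights)."""
    reward = -step_penalty               # small cost per step discourages stalling
    reward -= 1.0 * max(0, prev_hp - hp) # penalty for each hit point lost
    if boss_hit:
        reward += 0.1                    # hypothetical bonus for damaging the boss
    if won:
        reward += 10.0                   # hypothetical terminal bonus for winning
    return reward
```

Keeping the terminal bonus much larger than the per-step terms is one way to keep the agent focused on winning rather than on farming small rewards.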

Imitation Learning

Incorporated human gameplay demonstrations to accelerate learning and overcome exploration challenges.
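One common way to use demonstrations with a DQN is to mix recorded human transitions into each training batch. The sketch below illustrates that approach under stated assumptions: the function name `sample_mixed_batch` and the `demo_fraction=0.25` ratio are hypothetical, and the project may have incorporated demonstrations differently.

```python
import random


def sample_mixed_batch(demo_transitions, agent_transitions, batch_size,
                       demo_fraction=0.25, rng=random):
    """Draw a batch containing a fixed share of human demonstration data."""
    # Cap each share by what is actually available in its pool.
    n_demo = min(len(demo_transitions), int(batch_size * demo_fraction))
    n_agent = min(len(agent_transitions), batch_size - n_demo)
    batch = rng.sample(demo_transitions, n_demo) + rng.sample(agent_transitions, n_agent)
    rng.shuffle(batch)  # avoid a fixed demo-then-agent ordering within the batch
    return batch
```

Early in training, when the agent's own buffer is nearly empty, the batch consists mostly of demonstrations, which gives the network useful targets before random exploration has found any.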

Videos

Watch CupBot in action and see how it was implemented:

CupBot AI beats The Root Pack (No Talking)

CupBot AI Implementation

Training Results

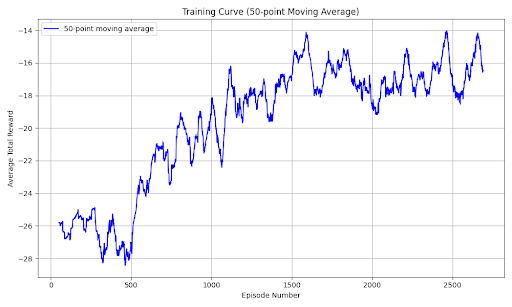

The graph below shows the agent's reward improvement over training episodes:

CupBot's 50-episode moving average reward progression during training
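For reference, a 50-episode moving average like the one plotted can be computed from raw per-episode rewards as sketched below. The function name and the choice to average over fewer episodes until the window fills are assumptions; the original plotting code may handle the first 49 episodes differently.

```python
def moving_average(rewards, window=50):
    """Trailing mean of the last `window` rewards at each episode."""
    averages = []
    running_sum = 0.0
    for i, r in enumerate(rewards):
        running_sum += r
        if i >= window:
            running_sum -= rewards[i - window]  # drop the reward leaving the window
        # Until the window fills, average over the episodes seen so far.
        averages.append(running_sum / min(i + 1, window))
    return averages
```

Smoothing this way makes the trend readable, since individual episode rewards in a boss fight can swing widely from one attempt to the next.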